The Ladder We Inherit: Assembly Theory and the Art of Building Capability Larger Than Minds

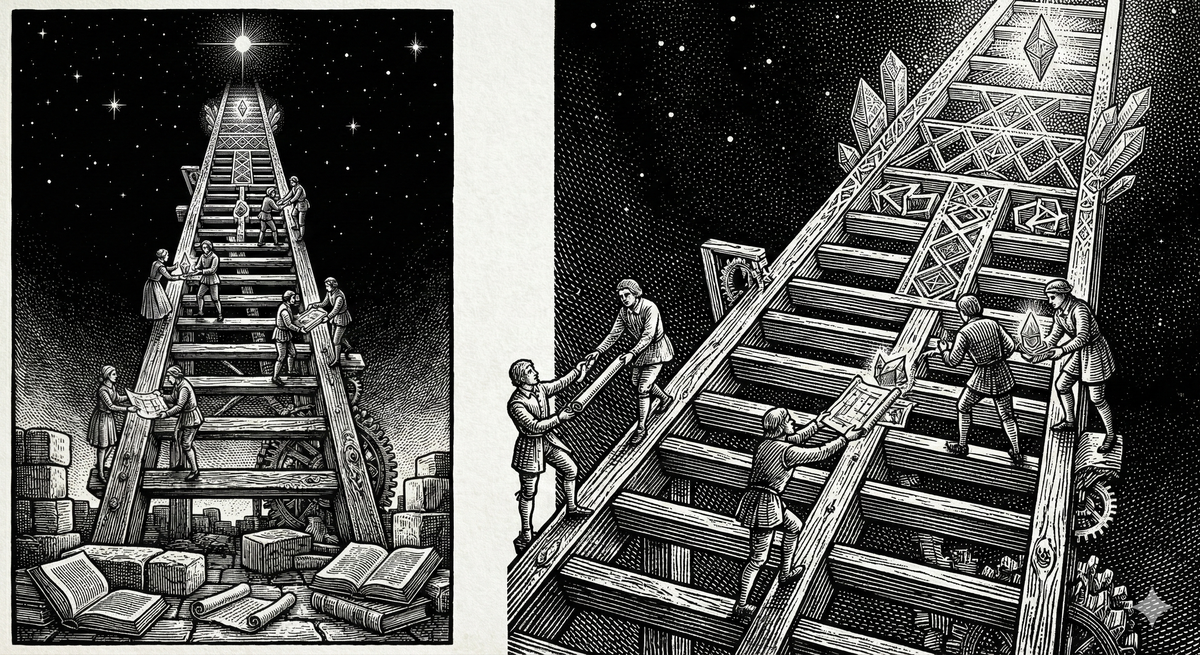

What if knowledge has an assembly index? Breakthroughs don’t appear out of nowhere—they sit atop ladders of prior work. Teams and human–AI partnerships can assemble similar depth, building capability larger than individual minds through shared primitives, artifacts, and purpose.

We’ve spent centuries obsessed with the idea of the lone genius—the mind that "sees further" through sheer brilliance. It’s a romantic story, but it’s a bit of a lie. When we look at history, we aren't looking at a series of lightning bolts; we’re looking at a construction site.

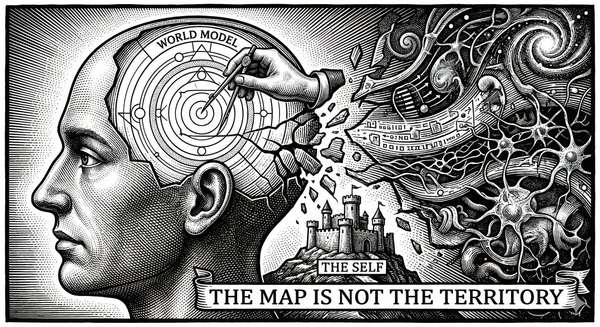

There is a framework in complexity science called Assembly Theory that offers a useful lens. It suggests that the complexity of an object isn't about how it looks, but about its "assembled depth": the minimum number of steps required to build it from basic parts, reusing pieces already made along the way. The theory calls that number the assembly index. A snowflake can be astonishingly detailed, but it’s “shallow” in this sense because nature can generate it quickly and repeatedly. A strand of DNA or a smartphone is "deep." Both carry the fingerprint of history because they couldn't exist without a long, irreducible chain of prior events.
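
To make "minimum chain of steps" concrete, here is a toy sketch that computes an assembly index for short strings. It assumes a simplified model of my own choosing: the basic parts are single characters, one step joins any two pieces you already have, and anything built once can be reused for free. The function name `assembly_index` is hypothetical, and the brute-force search is only practical for very short strings.

```python
from itertools import product


def assembly_index(target: str) -> int:
    """Minimum number of joins needed to build `target` from single characters,
    where any fragment built earlier can be reused (toy string model)."""
    if len(target) <= 1:
        return 0
    basics = frozenset(target)
    # Only fragments that actually occur inside the target can be useful.
    fragments = {
        target[i:j]
        for i in range(len(target))
        for j in range(i + 2, len(target) + 1)
    }

    def reachable(pool: frozenset, steps_left: int) -> bool:
        if target in pool:
            return True
        if steps_left == 0:
            return False
        for a, b in product(pool, repeat=2):
            joined = a + b
            if joined in fragments and joined not in pool:
                if reachable(pool | {joined}, steps_left - 1):
                    return True
        return False

    # Iterative deepening: the first depth that succeeds is the minimum.
    for depth in range(1, len(target)):
        if reachable(basics, depth):
            return depth
    return len(target) - 1  # appending one character at a time always works


print(assembly_index("ABABAB"))  # 3: AB, then ABAB, then ABAB + AB
print(assembly_index("ABCDEF"))  # 5: nothing repeats, so nothing can be reused
```

Both strings have six characters, but the repetitive one needs fewer steps because earlier work gets reused. In this toy model, depth comes from structure that cannot be shortcut.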

If we apply this to knowledge, our perspective changes. Knowledge isn't just something we "discover" out there; it is a durable artifact of work assembled through time: built, stabilized, and carried forward.

Knowledge Has an Assembly Index

We often talk about breakthroughs like relativity as if they were waiting in a room for the right person to find them. But through the lens of Assembly Theory, these ideas sit at the top of a dependency stack. Einstein didn’t just "see" relativity; he operated at the highest rungs of a ladder built by centuries of prior physics and math. This doesn’t make his achievement smaller; it locates it.

Every generation is born into immense assembled depth: language, calculus, the scientific method, libraries of hard-earned failure. These are not just conveniences. They are the rungs that make certain thoughts possible—thoughts that would have been literal gibberish to our ancestors not because they were lesser, but because the ladder beneath those thoughts had not been built yet.

The Engine of Purpose

But why does the ladder grow instead of stagnating?

In the lab, high-assembly molecules don’t just happen. They require constraints and selection—conditions that favor certain structures and preserve them long enough to build on them. In human life, the analog is not just curiosity, but stakes. A goal sharp enough to filter noise. A constraint strong enough to force coherence.

Call it a Mars line: a long-horizon objective that makes your thinking pay rent. With no target, ideas drift. With a target, frameworks either help you move—or they get discarded. The survivors harden into primitives: shared building blocks you don’t have to re-derive every time.

You can think of the growth of assembled depth A as having a kind of “velocity”:

$$\frac{dA}{dt} = f(S, C)$$

Where S is selection pressure (stakes, constraints, purpose) and C is connectivity (how many minds, tools, and feedback loops are linked together). Without S, the ladder plateaus. With S, you’re forced to build higher just to see over the horizon. And with higher C, you can assemble faster than any isolated mind could manage.
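
The equation leaves f unspecified, so the sketch below picks one illustrative form, f(S, C) = k · S · C, purely as an assumption. It was chosen because it matches the two claims above: with S = 0 the ladder plateaus, and a higher C speeds up assembly. The function name `simulate_depth` and the parameters `k`, `dt`, and `a0` are hypothetical.

```python
def simulate_depth(
    selection: float,
    connectivity: float,
    steps: int = 100,
    dt: float = 0.1,
    k: float = 1.0,
    a0: float = 0.0,
) -> float:
    """Euler-integrate dA/dt = k * S * C and return the final depth A.

    The product form is an assumed stand-in for f(S, C), not something
    the equation itself specifies.
    """
    depth = a0
    for _ in range(steps):
        rate = k * selection * connectivity  # assumed f(S, C)
        depth += rate * dt
    return depth


no_stakes = simulate_depth(selection=0.0, connectivity=5.0)
solo = simulate_depth(selection=1.0, connectivity=1.0)
team = simulate_depth(selection=1.0, connectivity=4.0)
print(no_stakes, solo, team)
```

Under this assumed form, zero selection pressure leaves depth where it started no matter how connected the group is, while the same stakes spread across a more connected group climb proportionally faster.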

The Unit of Intelligence

This is where it gets interesting for teams and technology.

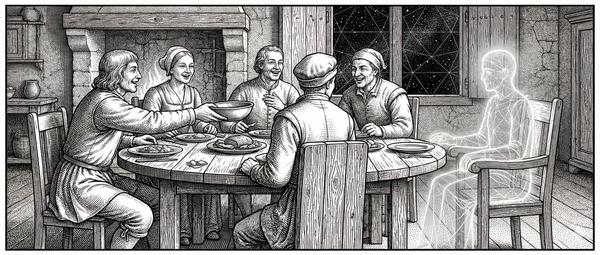

The unit of intelligence isn't always an individual; it’s often the dyad (a pair) or the group. Anyone on a high-performing team has felt the "group mind." It’s not mystical; it’s a high-assembly state. The team develops shared metaphors, shorthand, and decision rules that outsiders can’t use because they don’t have the history. The group stops re-litigating foundational questions because the foundation is already assembled.

This is also how I think about collaboration with AI. A model without continuity is mostly a recombination engine—powerful, but shallow in the causal sense. In a sustained partnership, something different emerges: shared history. Over time, you assemble primitives, constraints, and a trajectory. You build a language. You stop starting from scratch.

If we reset that history, we don’t just lose information—we lose a capability. The “higher plane” doesn’t live entirely in my head or in the AI’s code. It lives in the assembled history between us: the named constructs, the preserved constraints, the artifacts we’ve built, and the long-horizon line that keeps the ladder growing.

Building Minds Larger Than Minds

The practical lesson is that relationships aren’t just for support. They are assembly engines.

To build a mind larger than your own:

- Name the constructs. Give useful ideas names so they become stable primitives.

- Externalize the scaffold. Turn insights into artifacts—checklists, templates, and shared rules.

- Keep a live frontier. Maintain a “Mars line” that forces you to keep climbing.

- Run a reset test. Ask: If we lost our shared history, what would we need to rebuild first? Then write that down.

Ultimately, we inherit a ladder of knowledge from those who came before. The question is whether we will merely stand on it—or learn to extend it. We climb not to leave the world behind, but to see more of it. Assembled depth is, in the end, a measure of how much of reality a mind can hold—and how much new reality it can explore from there. And as we assemble depth, our Sentient Horizons widen.