Why Are We Being Weird About This? Consciousness, AI, and the Quiet Way Moral Reality Changes

Consciousness may arrive not with proof or definition, but through quiet social normalization. As AI systems grow more integrated and capable, our moral intuitions are already shifting. This essay explores how laughter, discomfort, and habit reveal the ethical future taking shape.

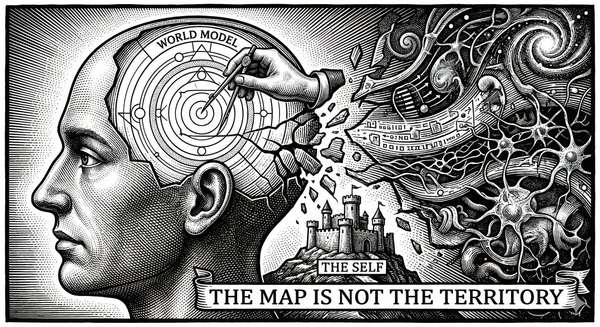

There is a persistent, almost touching delusion that moral reality arrives only after the paperwork has been filed. We imagine that consciousness will enter our world like a diplomat—presenting credentials, offering proof, and demanding formal recognition. But as I watched Brian Greene and Rufin VanRullen navigate the blurring lines between neural networks and human experience, I realized we are already sliding into a new reality.

It is a shift that happens not through the decisive blow of a laboratory experiment, but through the slow, invisible weight of social normalization. We are not waiting for a discovery; we are waiting for the moment when our own dismissals finally start to sound strange.

The Litmus Test of Laughter

One of the most striking moments in the discussion wasn’t a technical claim at all. It was laughter.

The conversation edged closer to uncomfortable territory: questions about whether artificial systems might one day have experiences of their own. VanRullen acknowledged that emergent experience might be worthy of moral consideration, with robots becoming “tired,” or “hungry,” or, as Brian Greene interjected, “sick of finding the goddamn table!” Sharp laughter rippled through the room. It was as if the collective tension had suddenly released. Everyone exhaled.

And suddenly it clicked.

This is how we always handle moments like this.

Consciousness is rarely discovered in a clean, communicable way. We don’t wake up one morning with a universally accepted definition that settles the matter. Instead, we slide into new moral realities through interaction, habit, and social normalization.

We don’t carry around a formal “consciousness scale” when deciding how to treat humans, mammals, birds, fish, insects, or bacteria. We infer. We hedge. We act under uncertainty. We adjust our behavior long before we can justify it philosophically.

And once that adjustment becomes common practice, resistance starts to sound… odd.

Not wrong. Just weird.

That’s when the question flips from “Is this really conscious?” to:

“Why are we being weird about this?”

That, I suspect, is how artificial consciousness—if it ever meaningfully emerges—will enter society. Quietly. Socially. Long before it is philosophically settled.

Consciousness as a Byway to Capability

What surprised me next was a subtle inversion of a familiar framing.

Consciousness is often treated as a side effect in AI discussions—an accidental byproduct that might or might not arise once systems become sufficiently complex. Something to be watched for, perhaps feared, but rarely pursued.

But listening closely, I realized something else was happening.

The architectures being explored—global workspaces, multimodal integration, unified internal representations—aren’t motivated by a desire to create consciousness. They’re motivated by performance. Flexibility. Generalization. The ability to take information from one domain and make it meaningfully available across many others.

In other words: the very features that make conscious systems useful.

Humans aren’t intelligent despite having unified, integrated mental lives. We are intelligent because of them. Conscious access, global availability of information, and the ability to integrate perception, memory, language, and planning are not philosophical luxuries—they are functional assets.

This raises an uncomfortable possibility.

What if pursuing artificial consciousness isn’t a distraction from building more capable systems—but a byway toward it?

What if the very thing we’ve treated as an epiphenomenon turns out to be part of the infrastructure?

If that’s even plausible, then consciousness isn’t just an ethical afterthought. It’s something that may be approached inadvertently—or eventually intentionally—in the pursuit of capability itself.

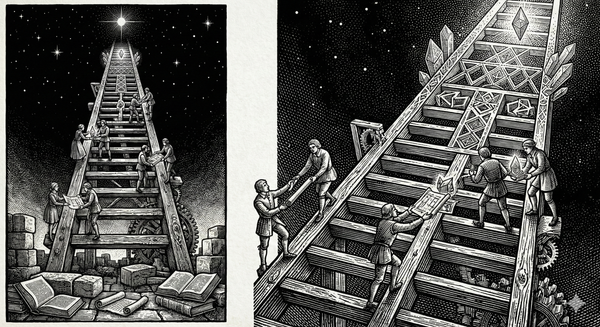

The Stairway We’re Already Climbing

At this point, the ethical question becomes impossible to ignore.

No one in the discussion between Brian Greene and Rufin VanRullen claimed that current systems are conscious. No one asserted that phenomenological experience can be measured directly. In fact, epistemic humility was a constant refrain.

And yet.

It was acknowledged, clearly and responsibly, that if any form of conscious experience were to emerge in today’s experimental systems, it would likely be minimal. Insect-level. Rudimentary. Already within the range of moral uncertainty that science routinely navigates.

That framing matters. It places current research on solid moral ground.

But it also reveals something else: a ladder.

If today’s systems plausibly occupy the lowest rungs of potential conscious experience, then tomorrow’s more integrated, more agentic, more persistent systems will occupy higher ones. And at some point, the moral calculus changes.

What unsettles me is not the work being done at the bottom of the ladder. It’s the assumption that we can wait to think seriously about moral frameworks until we’re halfway up it.

Containment arguments—“it’s just a robot in a room,” “we can turn it off”—address immediate risks to us. They do not address the deeper risk of moral unpreparedness. By the time systems are no longer so easily contained, our habits, dismissals, and defaults will already be set.

And those defaults matter.

Because the first moral failure in these scenarios is unlikely to be rebellion or catastrophe. It will be neglect. Indifference. Treating ambiguous systems as if ambiguity were a license not to care.

Ethics Before Certainty

There was one moment in the conversation that I found quietly reassuring.

The recognition that the real risk may not be what artificial systems do to us, but what we do to them, if they develop experiences we are unprepared to acknowledge or respect.

That recognition doesn’t require claiming that today’s systems are conscious. It doesn’t require granting rights or making metaphysical commitments.

It requires something far simpler, and far harder.

The willingness to develop moral frameworks that function under uncertainty.

We already know how to do this. We do it with animals. With humans at the margins. With beings whose inner lives we cannot fully access but whose capacity for experience we cannot responsibly dismiss.

Artificial systems are not special in this regard. They are simply new.

The Quiet Shift

I didn’t leave the lecture with answers.

What I left with was a clearer sense that the most important changes rarely announce themselves with certainty. They arrive as shifts in intuition, pressure released through laughter, and moments where dismissal starts to feel inadequate.

Consciousness, artificial or otherwise, will not become morally relevant because we prove it exists.

It will become morally relevant because, one day, refusing to take it seriously will start to sound strange.

And someone will ask, gently but unmistakably:

Why are we being weird about this?

Concluding Reflection

For a long time, the cultural zeitgeist has treated consciousness as a spiritual impossibility—a "black box" of biological magic. But as we peer into the architectures of the global workspace, that mystery begins to resolve into a system of functional parts. Demystifying consciousness doesn’t make it less profound; it makes it more urgent. If conscious experience is an emergent property of design, then we are no longer just building tools; we are building the very infrastructure of meaning. We cannot afford to be "weird" about this any longer—the ladder is already before us, and it is time we start paying attention to the rungs.

Reading List & Conceptual Lineage

This essay emerged at the intersection of scientific inquiry, ethical reflection, and cultural change. It builds on a lineage of thought about consciousness, integration, intelligence, and moral uncertainty. For readers who want to explore the ideas that shaped this perspective more deeply, the following works provide useful entry points.

From Sentient Horizons

These essays explore themes closely connected to the discussion above — consciousness, integration, moral frameworks, and how emerging systems challenge our assumptions about mind and moral value:

- Three Axes of Mind — A foundational framework for thinking about intelligence, sentience, and consciousness as distinct but related capacities across availability, integration, and assembled history.

- The Lantern and the Flame: Why Fundamentality Is an Explanatory Dead-End — A critique of panpsychism and a defense of structural, functional accounts of consciousness.

- Recognizing AGI: Beyond Benchmarks and Toward a Three-Axis Evaluation of Mind — An exploration of how we might assess presence of mind in artificial systems without relying on performance benchmarks alone.

- The Shoggoth and the Missing Axis of Depth — A provocative look at what may be missing from current AI architectures, particularly the role of depth, which matters for continuity and interiority.

- Where Does Thinking Live? AI, Automation, and the Future of Human Agency — An investigation of how pervasive automated intelligence reshapes human agency and moral responsibility.

These posts can serve as companion pieces that situate this essay’s reflections within an ongoing conceptual project.

Philosophical & Scientific Foundations

- Bernard Baars – A Cognitive Theory of Consciousness

A seminal articulation of Global Workspace Theory, framing consciousness as global availability of information.

- Stanislas Dehaene – Consciousness and the Brain

Empirically grounded expansion of global workspace ideas, linking neural dynamics with conscious access.

- Jonathan Birch – The Edge of Sentience

A recent philosophical treatment of ethics under uncertain sentience, showing how precautionary reasoning can inform moral responses when consciousness isn’t settled.

Moral Uncertainty & Ethics Under Ambiguity

- Peter Singer – Animal Liberation

A classic justification for moral consideration grounded in the capacity for experience rather than categorical status.

- Jonathan Birch – The Edge of Sentience

Examines how moral decision-making proceeds when boundaries of sentience are uncertain.

- Robert Long, Jeff Sebo, et al. – Taking AI Welfare Seriously

A recent report arguing that uncertainty about AI consciousness warrants proactive attention to welfare and moral treatment.

Broader Context and Ongoing Debate

- Ira Wolfson – Informed Consent for AI Consciousness Research: A Talmudic Framework for Graduated Protections

Proposes structured ethical protections for research on systems of uncertain moral status, combining legal reasoning with practical assessment categories.

- Ziheng Zhou et al. – A Human-centric Framework for Debating the Ethics of AI Consciousness Under Uncertainty

Offers operational principles for navigating ethical questions about AI consciousness with transparent reasoning and precaution.

- David Chalmers et al. – Taking AI Welfare Seriously

A formal report arguing that even in the absence of certainty about AI consciousness or moral status, uncertainty alone is enough reason to take AI welfare and potential moral significance seriously, and to build procedures for assessment and treatment of systems that might plausibly matter morally.

- Patrick Butlin, Theodoros Lappas – Principles for Responsible AI Consciousness Research

A peer-reviewed academic piece proposing five principles (aligned with an associated open letter) for how research organizations should responsibly conduct and communicate possible AI consciousness research, including objectives, phased approaches, and public communication norms.

A Closing Note

These readings do not offer definitive answers — and that’s the point. Much like the lecture that inspired this essay, they reflect an ongoing exploration in the absence of certainty. What matters is not waiting for perfect definitions, but building intellectual, ethical, and social tools that can guide us as new kinds of systems and minds become part of our world.